For those who do not know what the Azure Kinect is: it is a developer kit with advanced AI sensors for building sophisticated computer vision and speech models.

Topics covered in this post:

- Hardware

- Field of View RGB / Depth

- SDKs

1 – Hardware

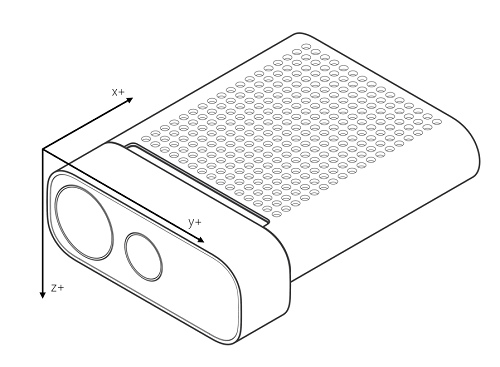

This is the hardware presented by Microsoft. As you can see in the picture, this Kinect, which weighs only 440 g, has:

- RGB Camera:

- OV12A10 12 MP CMOS rolling-shutter sensor.

- USB video class-compatible, so it can be used without the Sensor SDK (see the SDK section at the bottom of this post for what the SDK is).

- Color space: BT.601 full range [0..255]

- Depth Camera:

- 1-Megapixel Time-of-Flight (ToF) imaging chip enabling higher modulation frequencies and depth precision.

- Two NIR Laser diodes enabling near and wide FoV depth modes.

- Depth may be provided outside of the indicated range, depending on object reflectivity.

- Implements the Amplitude Modulated Continuous Wave (AMCW) ToF principle. It casts modulated illumination in the near-IR (NIR) spectrum onto the scene, then records an indirect measurement of the time it takes the light to travel from the camera to the scene and back.

- IR emitters.

- Motion Sensor (IMU):

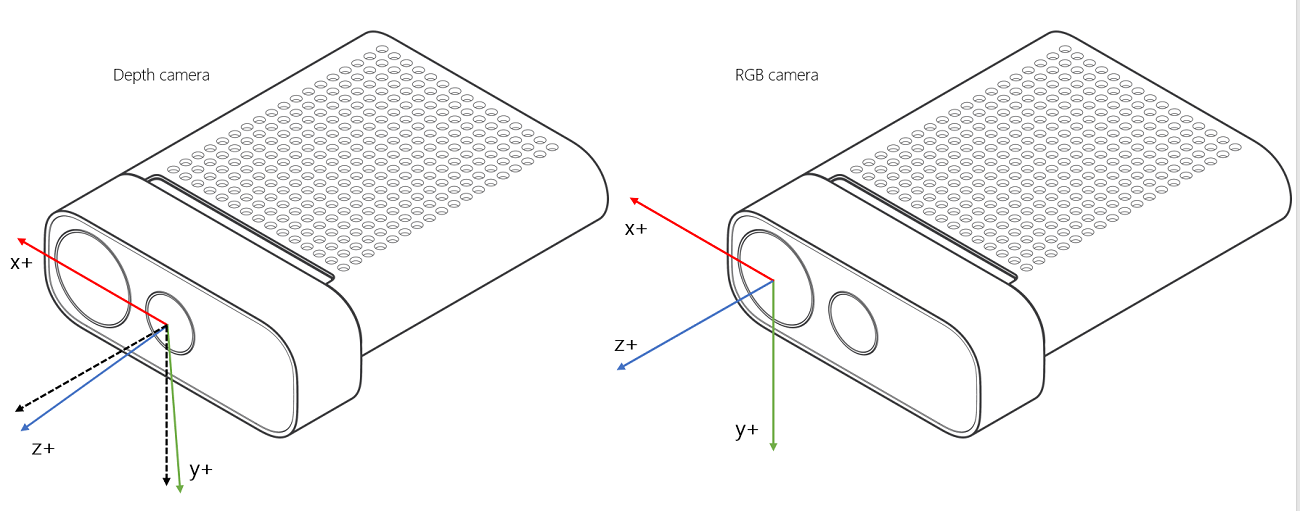

- The LSM6DSMUS includes an accelerometer and a gyroscope, both sampled at 1.6 kHz and reported to the host at 208 Hz. Both coordinate systems are right-handed, with the origin at [0,0,0].

- Microphone array:

- 7-microphone circular array that identifies as a standard USB audio class 2.0 device.

- Sensitivity: –22 dBFS (94 dB SPL, 1 kHz)

- Signal to noise ratio > 65 dB

- Acoustic overload point: 116 dB
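The AMCW principle described for the depth camera above can be illustrated with a little arithmetic. This is only a minimal sketch of the textbook phase-to-distance relation, not the actual depth engine: the function names and the 100 MHz modulation frequency are assumptions chosen for illustration, and the real device's frequencies are not stated in this post.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def phase_to_distance_m(phase_rad, modulation_hz):
    """Distance implied by the phase shift of the returned modulated light."""
    # The light travels camera -> scene -> camera, so the round trip
    # contributes a factor of 2, giving 4*pi instead of 2*pi.
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


# Hypothetical modulation frequency, for illustration only.
EXAMPLE_MODULATION_HZ = 100e6

if __name__ == "__main__":
    quarter_turn = phase_to_distance_m(math.pi / 2, EXAMPLE_MODULATION_HZ)
    print(f"phase pi/2 -> {quarter_turn:.3f} m")
    print(f"unambiguous range -> {unambiguous_range_m(EXAMPLE_MODULATION_HZ):.3f} m")
```

In this simplified model a higher modulation frequency maps the same phase step to a smaller distance step, which is one way to read the "higher modulation frequencies and depth precision" point above, at the price of a shorter range before the phase wraps.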

2 – Field of View RGB / Depth

The best way to understand the field of view, and the angle that the sensors “see”, is through this diagram.

- The diagram shows the RGB camera (1) in 4:3 mode from a distance of 2000 mm.

- Regarding the depth camera views (2) and (3): the depth camera is tilted 6 degrees downwards relative to the color camera. It is important to understand that this camera transmits raw modulated IR images to the host PC, where the depth engine software converts the raw signal into depth maps. As described in the image, the supported modes are:

- NFOV (Narrow field-of-view): These modes are ideal for scenes with smaller extents in X and Y but larger extents in Z. The illuminator used in these modes is aligned with the depth camera case, so it is not tilted.

- WFOV (Wide field-of-view): These modes are ideal for scenes with larger extents in X and Y but smaller extents in Z. The illuminator used in these modes is tilted an additional 1.3 degrees downward relative to the depth camera.

- The depth camera also supports 2×2 binning modes, which extend the Z-range compared to the corresponding unbinned modes described above, at the cost of lower image resolution.

Note: When depth is in NFOV mode, the RGB camera has better pixel overlap in 4:3 than in 16:9 resolutions.
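To get a feel for what each view covers at the 2000 mm distance used in the diagram, the footprint of a field of view can be estimated with basic trigonometry. The sketch below is a rough illustration only: the angles in `ASSUMED_FOV_DEG` are assumptions taken from memory of the published hardware specification and should be checked against it, the helper name is made up for this example, and the model ignores the camera tilts and treats every view as a simple rectangular pyramid.

```python
import math


def footprint_mm(fov_deg, distance_mm):
    """Width (or height) covered by a field-of-view angle at a given distance."""
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0)


# Assumed (horizontal, vertical) angles in degrees; verify against the
# official hardware specification before relying on them.
ASSUMED_FOV_DEG = {
    "RGB 4:3": (90.0, 74.3),
    "Depth NFOV": (75.0, 65.0),
    "Depth WFOV": (120.0, 120.0),
}

if __name__ == "__main__":
    distance_mm = 2000.0
    for name, (h_deg, v_deg) in ASSUMED_FOV_DEG.items():
        width = footprint_mm(h_deg, distance_mm)
        height = footprint_mm(v_deg, distance_mm)
        print(f"{name}: about {width:.0f} mm x {height:.0f} mm at {distance_mm:.0f} mm")
```

Comparing the printed footprints gives an intuition for why the narrow modes suit scenes that are small in X and Y, and the wide modes suit scenes that are large in X and Y.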

3 – SDK

The K4A DK consists of the following SDKs:

- Sensor SDK (primarily a C API; the linked documentation also covers the C++ wrapper):

- Note: It can provide color images in the BGRA pixel format. The host CPU is used to convert the MJPEG images received from the device.

- Features included:

- Depth and RGB Camera control and access.

- Motion sensor.

- Synchronized Depth-RGB camera streaming with configurable delay between cameras.

- Device synchronization

- Camera frame meta-data

- Device calibration data access

- Tools

- Azure Kinect Viewer

- Azure Kinect Recorder

- Firmware update tool

- Body Tracking SDK (primarily a C API):

- Features

- Body Segmentation

- Anatomically correct skeleton for each partial or full body in FOV.

- Unique identity for each body.

- Can track bodies over time.

- Tools

- Viewer tool to track bodies in 3
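Going back to the Sensor SDK note about BGRA color images: because BGRA stores 4 bytes per pixel, the decoded frames are far larger than the MJPEG data the device sends, which is part of why the conversion cost lands on the host CPU. The sketch below just does that arithmetic. The 1280×720 resolution and 30 fps are example values assumed for illustration, and the function names are hypothetical helpers, not SDK calls.

```python
BYTES_PER_BGRA_PIXEL = 4  # one byte each for blue, green, red and alpha


def bgra_frame_bytes(width, height):
    """Size in bytes of one uncompressed BGRA frame."""
    return width * height * BYTES_PER_BGRA_PIXEL


def bgra_stream_bytes_per_second(width, height, fps):
    """Uncompressed BGRA data the host has to produce every second."""
    return bgra_frame_bytes(width, height) * fps


if __name__ == "__main__":
    # Example values only; pick the mode you actually configure.
    width, height, fps = 1280, 720, 30
    per_frame_mib = bgra_frame_bytes(width, height) / 2**20
    per_second_mib = bgra_stream_bytes_per_second(width, height, fps) / 2**20
    print(f"{per_frame_mib:.2f} MiB per frame, {per_second_mib:.1f} MiB per second")
```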
